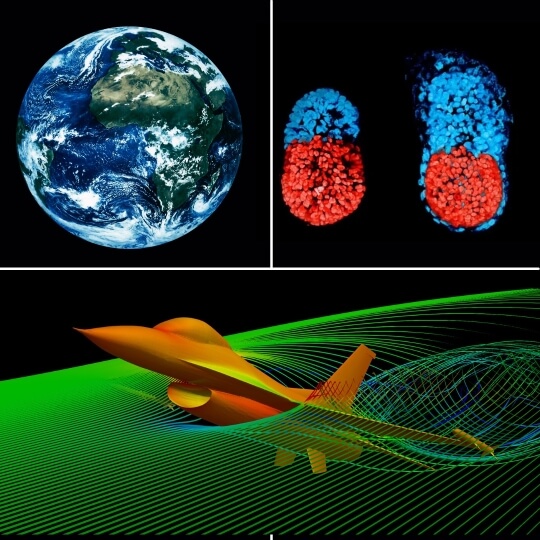

Research combines artificial intelligence and computational science for accurate and efficient simulations of complex systems, including climate systems, tissue morphogenesis and turbulent flows.

Predicting how the climate and the environment will change over time, or how air flows over an aircraft, is too complex a problem for even the most powerful supercomputers to solve. Scientists rely on models to fill the gap between what they can simulate and what they need to predict. But, as every meteorologist knows, models often rely on partial or even faulty information, which may lead to bad predictions.

Now, researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) are forming what they call “intelligent alloys”, combining the power of computational science with artificial intelligence to develop models that complement simulations to predict the evolution of science’s most complex systems.

In a paper published in Nature Communications, Petros Koumoutsakos, the Herbert S. Winokur, Jr. Professor of Engineering and Applied Sciences, and co-author Jane Bae, a former postdoctoral fellow at the Institute for Applied Computational Science at SEAS, combined reinforcement learning with numerical methods to compute turbulent flows, one of the most complex processes in engineering.

Reinforcement learning algorithms are the machine equivalent of B.F. Skinner’s behavioral conditioning experiments. Skinner, the Edgar Pierce Professor of Psychology at Harvard from 1959 to 1974, famously trained pigeons to play ping pong by rewarding the avian competitor that could peck a ball past its opponent. The rewards reinforced strategies, like cross-table shots, that would often result in a point and a tasty treat.

In the intelligent alloys, the pigeons are replaced by machine learning algorithms (or agents) that learn by interacting with mathematical equations.

“We take an equation and play a game where the agent is learning to complete the parts of the equations that we cannot resolve,” said Bae, who is now an Assistant Professor at the California Institute of Technology. “The agents add information from the observations the computations can resolve and then they improve what the computation has done.”

“In many complex systems like turbulence flows, we know the equations, but we will never have the computational power to solve them accurately enough for engineering and climate applications,” said Koumoutsakos. “By using reinforcement learning, many agents can learn to complement state-of-the-art computational tools to solve the equations accurately.”

Using this process, the researchers were able to predict challenging turbulent flows interacting with solid walls, such as a turbine blade, more accurately than current methods.

“There is a huge range of applications because every engineering system from offshore wind turbines to energy systems uses models for the interaction of the flow with the device and we can use this multi-agent reinforcement idea to develop, augment and improve models,” said Bae.

In a second paper, published in Nature Machine Intelligence, Koumoutsakos and his colleagues used machine learning algorithms to accelerate predictions in simulations of complex processes that take place over long periods of time. Take morphogenesis, the process of differentiating cells into tissues and organs. Understanding every step of morphogenesis is critical to understanding certain diseases and organ defects, but no computer is large enough to image and store every step of morphogenesis over months.

“If a process happens in a matter of seconds and you want to understand how it works, you need a camera that takes pictures in milliseconds,” said Koumoutsakos. “But if that process is part of a larger process that takes place over months or years, like morphogenesis, and you try to use a millisecond camera over that entire timescale, forget it — you run out of resources.”

Koumoutsakos and his team, which included researchers from ETH Zurich and MIT, demonstrated that AI could be used to generate reduced representations of fine-scale simulations (the equivalent of experimental images), compressing the information almost like zipping large files. The algorithms can then reverse the process, moving the reduced image back to its full state. Solving in the reduced representation is faster and uses far less energy than performing computations with the full state.

“The big question was, can we use limited instances of reduced representations to predict the full representations in the future,” Koumoutsakos said.

The answer was yes.

“Because the algorithms have been learning reduced representations that we know are true, they don’t need the full representation to generate a reduced representation for what comes next in the process,” said Pantelis Vlachas, a graduate student at SEAS and first author of the paper.

By using these algorithms, the researchers demonstrated that they can generate predictions thousands to a million times faster than running the simulations at full resolution. Because the algorithms have learned how to compress and decompress the information, they can then generate a full representation of the prediction, which can be compared to experiments. The researchers demonstrated this approach on simulations of complex systems, including molecular processes and fluid mechanics.
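For readers who want a feel for the compress, predict, decompress idea, here is a minimal Python sketch. It is not the team's method: the article does not describe their algorithms, so this example swaps in a simple linear stand-in (a singular value decomposition for compression and a least-squares fit for the reduced dynamics). The toy system, the function names and every parameter are assumptions made for illustration only.

```python
import numpy as np

def make_snapshots(n_steps, n_full=50, seed=0):
    """Toy 'full' simulation: a 2-D rotation hidden inside a 50-D state (assumed)."""
    rng = np.random.default_rng(seed)
    theta = 0.1
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])
    # Orthonormal columns that lift the 2-D latent state into the full space.
    lift, _ = np.linalg.qr(rng.standard_normal((n_full, 2)))
    z = np.array([1.0, 0.0])
    snaps = []
    for _ in range(n_steps):
        snaps.append(lift @ z)
        z = rot @ z
    return np.array(snaps).T  # shape: (n_full, n_steps)

def fit_reduced_model(snapshots, rank):
    """Learn a compression basis and a one-step map in the reduced space."""
    u, _, _ = np.linalg.svd(snapshots, full_matrices=False)
    basis = u[:, :rank]                # compress: z = basis.T @ x
    reduced = basis.T @ snapshots      # shape: (rank, n_steps)
    # Least-squares fit so that step @ reduced[:, t] approximates reduced[:, t + 1].
    step = reduced[:, 1:] @ np.linalg.pinv(reduced[:, :-1])
    return basis, step

def predict(basis, step, x0, n_steps):
    """Compress x0, advance in the reduced space, then decompress."""
    z = basis.T @ x0
    for _ in range(n_steps):
        z = step @ z
    return basis @ z                   # decompress: x = basis @ z

data = make_snapshots(200)
# Train on the first 100 snapshots only.
basis, step = fit_reduced_model(data[:, :100], rank=2)
# Forecast 100 steps ahead without touching the full simulation.
forecast = predict(basis, step, data[:, 99], n_steps=100)
error = np.linalg.norm(forecast - data[:, 199]) / np.linalg.norm(data[:, 199])
print(f"relative forecast error: {error:.2e}")
```

In this toy case the forecast loop multiplies 2-by-2 matrices instead of working with the 50-dimensional state, which is the source of the savings. Real systems such as turbulence are nonlinear, so a linear stand-in like this one would not be expected to carry over directly.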

“In one paper, we use AI to complement the simulations by building clever models. In the other paper, we use AI to accelerate simulations by several orders of magnitude. Next, we hope to explore how to combine these two. We call these methods Intelligent Alloys as the fusion can be stronger than each one of the parts. There is plenty of room for innovation in the space between AI and Computational Science,” said Koumoutsakos.

The Nature Machine Intelligence paper was co-authored by Georgios Arampatzis (Harvard/ETH Zurich) and Caroline Uhler (MIT).

Topics: AI / Machine Learning, Computer Science

Scientist Profiles

Petros Koumoutsakos

Herbert S. Winokur, Jr. Professor of Computing in Science and Engineering

Press Contact

Leah Burrows | 617-496-1351 | lburrows@seas.harvard.edu